CCNYM Projects

Wearable Audio Video Eye System (WAVES)

Based on the 2002 world population, there are more than 161 million visually impaired people in the world today, of whom 37 million are blind. The goal of the WAVES project is to explore and develop computer vision-based assistive technologies that help visually impaired persons understand the surrounding environment and form mental representations of it by using wearable sensors. Our research efforts focus on two threads: 1) computer vision-based technology for scene understanding, including context information extraction and recognition, stationary object detection and recognition, moving object detection and recognition, and adaptation to dynamic environment changes; and 2) user interface and usability studies, including auditory display and spatial updating of object location, orientation, and distance, as well as environment changes.

For more details, please come back later.

Automatic Target Detection, Tracking, and Recognition by Airborne Hyperspectral Imagery

Automatic target detection, tracking, and recognition in multispectral/hyperspectral imagery is a challenging problem that involves several technologies, such as optical sensor design, signal/image processing, pattern recognition, and computer vision algorithms. Civil and military applications include surveillance of ground, ocean, air, and space. In this project, we will also investigate how to extract reliable information from various sensing situations and how to design cost-effective sensors.

For more details, please come back later.

Moving Object Detection and Tracking in Challenging Environments

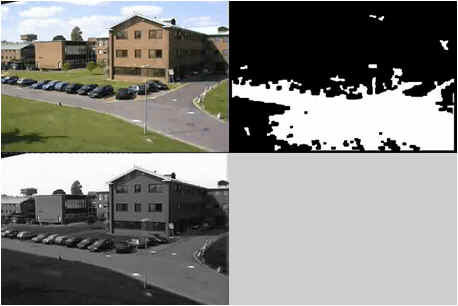

Moving object detection in environments with quick lighting changes:

Here are some results of moving object detection by the Gaussian mixture method with (right columns) and without (left columns) our improvement (click on the image to play demo video). The upper-left image shows the original frame, the lower-left image shows the background, and the upper-right image shows the foreground.

| Without our improvement | With our improvement |
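To make the baseline concrete, here is a minimal sketch of a generic per-pixel Gaussian mixture background model in the Stauffer-Grimson style. It is not the improved method shown in the videos, and it is not taken from the project's code. The class name `PixelMixture`, the helper `frames_flagged_after_jump`, and all parameter values (learning rate, match threshold, background weight) are assumptions chosen for illustration. The helper shows why a quick lighting change is hard for the plain method: after a sudden brightness jump, an unchanged background pixel keeps being reported as foreground for several frames until the new intensity has accumulated enough weight.

```python
from dataclasses import dataclass, field


@dataclass
class Gaussian:
    weight: float
    mean: float
    var: float


@dataclass
class PixelMixture:
    """Gaussian mixture for one grayscale pixel (illustrative sketch only)."""
    alpha: float = 0.05         # learning rate (assumed value)
    match_sigmas: float = 2.5   # match threshold in standard deviations
    bg_weight: float = 0.7      # cumulative weight treated as background
    init_var: float = 225.0     # variance of a newly created component
    min_var: float = 4.0        # floor that keeps components from collapsing
    max_components: int = 3
    components: list = field(default_factory=list)

    def update(self, x: float) -> bool:
        """Fold sample x into the model; return True if x is foreground."""
        # Rank components by weight / sigma: heavy, narrow ones come first.
        self.components.sort(key=lambda g: g.weight / g.var ** 0.5, reverse=True)

        # Find the first component that explains the sample.
        matched = None
        for g in self.components:
            if abs(x - g.mean) <= self.match_sigmas * g.var ** 0.5:
                matched = g
                break

        # The top-ranked components whose weights add up past bg_weight
        # form the background; a match outside that set is foreground.
        is_foreground = True
        cumulative = 0.0
        for g in self.components:
            if g is matched:
                is_foreground = False
                break
            cumulative += g.weight
            if cumulative > self.bg_weight:
                break

        # Decay all weights, then reinforce the match or add a new component.
        for g in self.components:
            g.weight *= 1.0 - self.alpha
        if matched is not None:
            matched.weight += self.alpha
            diff = x - matched.mean
            matched.mean += self.alpha * diff
            matched.var = max((1.0 - self.alpha) * matched.var
                              + self.alpha * diff * diff, self.min_var)
        else:
            new = Gaussian(weight=self.alpha, mean=x, var=self.init_var)
            if len(self.components) < self.max_components:
                self.components.append(new)
            else:
                self.components[-1] = new  # replace the lowest-ranked one

        total = sum(g.weight for g in self.components)
        for g in self.components:
            g.weight /= total
        return is_foreground


def frames_flagged_after_jump(old_level, new_level, warmup=50, limit=100):
    """Count frames wrongly flagged as foreground after a brightness jump."""
    model = PixelMixture()
    for _ in range(warmup):
        model.update(old_level)
    flagged = 0
    for _ in range(limit):
        if not model.update(new_level):
            break
        flagged += 1
    return flagged


if __name__ == "__main__":
    print(frames_flagged_after_jump(100.0, 150.0))
```

A lower `bg_weight` or a higher `alpha` shortens the lag but also makes the model absorb real objects faster, which is the trade-off that motivates handling lighting changes separately.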

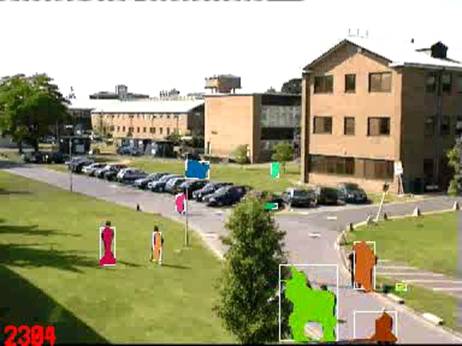

Here are more demo videos comparing our method with some existing methods [1, 2] for handling quick lighting changes.

Result video from method [1] (click on the image to play demo video):

Result video from method [2] (click on the image to play demo video):

The foreground blobs are displayed on the original image.

[1] J. Connell, A. W. Senior, A. Hampapur, Y.-L. Tian, L. Brown, and S. Pankanti, “Detection and tracking in the IBM PeopleVision system,” in IEEE ICME, June 2004.

[2] Y. Tian, M. Lu, and A. Hampapur, “Robust and Efficient Foreground Analysis for Real-time Video Surveillance,” in IEEE CVPR, San Diego, June 2005.

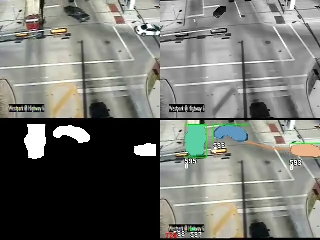

To handle slow-moving or stopped objects, we create a feedback mechanism that allows background subtraction (BGS) and tracking to interact within the same background subtraction framework. The following videos demonstrate the BGS and tracking results with and without the interaction.

Result videos with/without BGS and tracking interaction (click on the image to play demo video). Upper-left: the original frame; upper-right: background image; lower-left: foreground image; lower-right: tracking output.

| Without Interaction | With Interaction |
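One plausible form of the feedback described above can be sketched as follows. This is an assumption for illustration, not the project's actual mechanism: the tracker supplies a mask of pixels that belong to tracked objects, and the background model skips those pixels when it updates, so a stopped object is not absorbed into the background. The function names `update_background` and `foreground_mask`, the running-average model, and the parameter values are all hypothetical.

```python
def update_background(background, frame, tracked_mask, alpha=0.1):
    """Blend frame into background, skipping pixels the tracker holds."""
    updated = []
    for bg_row, fr_row, mask_row in zip(background, frame, tracked_mask):
        updated.append([
            bg if held else (1.0 - alpha) * bg + alpha * fr
            for bg, fr, held in zip(bg_row, fr_row, mask_row)
        ])
    return updated


def foreground_mask(background, frame, threshold=30.0):
    """Mark pixels whose intensity differs from the background model."""
    return [
        [abs(fr - bg) > threshold for bg, fr in zip(bg_row, fr_row)]
        for bg_row, fr_row in zip(background, frame)
    ]


if __name__ == "__main__":
    background = [[100.0, 100.0], [100.0, 100.0]]
    frame = [[200.0, 100.0], [100.0, 100.0]]      # object stopped at (0, 0)
    tracked = [[True, False], [False, False]]     # tracker feedback
    untracked = [[False, False], [False, False]]  # no feedback

    with_fb, without_fb = background, background
    for _ in range(50):
        with_fb = update_background(with_fb, frame, tracked)
        without_fb = update_background(without_fb, frame, untracked)

    print(foreground_mask(with_fb, frame)[0][0])     # object still detected
    print(foreground_mask(without_fb, frame)[0][0])  # object absorbed
```

Without the mask, the stopped object fades into the background after enough frames; with it, the object stays in the foreground for as long as the tracker keeps reporting it.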

Intelligent Video Activity Analysis

Intelligent video surveillance produces a large amount of event and activity data. This research will explore composite event detection, association mining, pattern discovery, and unusual pattern detection by using data mining.

For more details, please come back later.

Facial Expression Analysis in Naturalistic Environments

Research on facial expression analysis in naturalistic environments will have significant impact across a range of theoretical and applied topics. Real-life facial expression analysis must handle head motion (both in-plane and out-of-plane), occlusion, lighting changes, low-intensity expressions, low-resolution input images, the absence of a neutral face for comparison, and facial actions due to speech.

For more details, please come back later.

IBM Smart Surveillance Solutions
